This week, I’m sharing some resources I recently read about the past, present, and possible future of AI and ML. What have we done with AI? What can we do now? And what can we hope to do in the future?

A quick history of AI

As a summary:

This is a quick history of AI, from the ancient Greeks and Romans to today.

Through AI winters and revivals, the idea of artificial intelligence turns out to be very old.

We haven’t changed the way we do it: we still mostly use statistics and linear algebra. What has changed is that efficient, affordable machines now let us run those computations fast.

From 0 to 60 (Models) in Two Years: Building Out an Impactful Data Science Function

A success story from a data science team, where effort and a bit of luck combined to make an impact.

As a summary:

- Pragmatism:

No need for deep learning if a logistic regression can match the performance.

- Self-sufficient team:

We are not dependent on another team, with its other priorities and roadmaps, to make our models available.

- Self-service tools:

Those tools empower us, and all the other teams at WW Tech, to essentially run as fast as we want.

- Standardisation:

We wanted to encourage code reuse and standards as much as possible. As such, we made a decision to switch to a monorepo in Git and to develop a common framework for model development. This approach meant that team members could write less boilerplate code and focus on the more valuable aspects such as understanding the data and feature selection, and it meant that they could more easily peer-review (and learn from) each other’s code.

- Strong partnership with the product team:

At WW, the relationship between the product and tech organizations is especially tight.
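To make the pragmatism point from the list above concrete, here is a minimal sketch of a "simplest adequate model" rule. It is my own illustration, not code from the article: the `Candidate` type, the `pick_simplest_adequate` helper, the complexity ranks, and the scores are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str        # hypothetical model label
    complexity: int  # lower means simpler to build, deploy and maintain
    score: float     # validation metric, higher is better


def pick_simplest_adequate(candidates, tolerance=0.01):
    """Return the simplest candidate whose score is within `tolerance` of the best."""
    if not candidates:
        raise ValueError("need at least one candidate")
    best = max(c.score for c in candidates)
    # Keep every model that is "close enough" to the best score...
    adequate = [c for c in candidates if best - c.score <= tolerance]
    # ...then prefer the one that is cheapest to own.
    return min(adequate, key=lambda c: c.complexity)


# Placeholder scores, invented for illustration only (not real results).
candidates = [
    Candidate("logistic_regression", complexity=1, score=0.871),
    Candidate("gradient_boosting", complexity=2, score=0.874),
    Candidate("deep_network", complexity=3, score=0.878),
]

chosen = pick_simplest_adequate(candidates, tolerance=0.01)
print(chosen.name)
```

With these made-up numbers, every model is within the tolerance of the best one, so the rule keeps the logistic regression. Tightening the tolerance is how you would express that the extra performance really matters for your use case.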

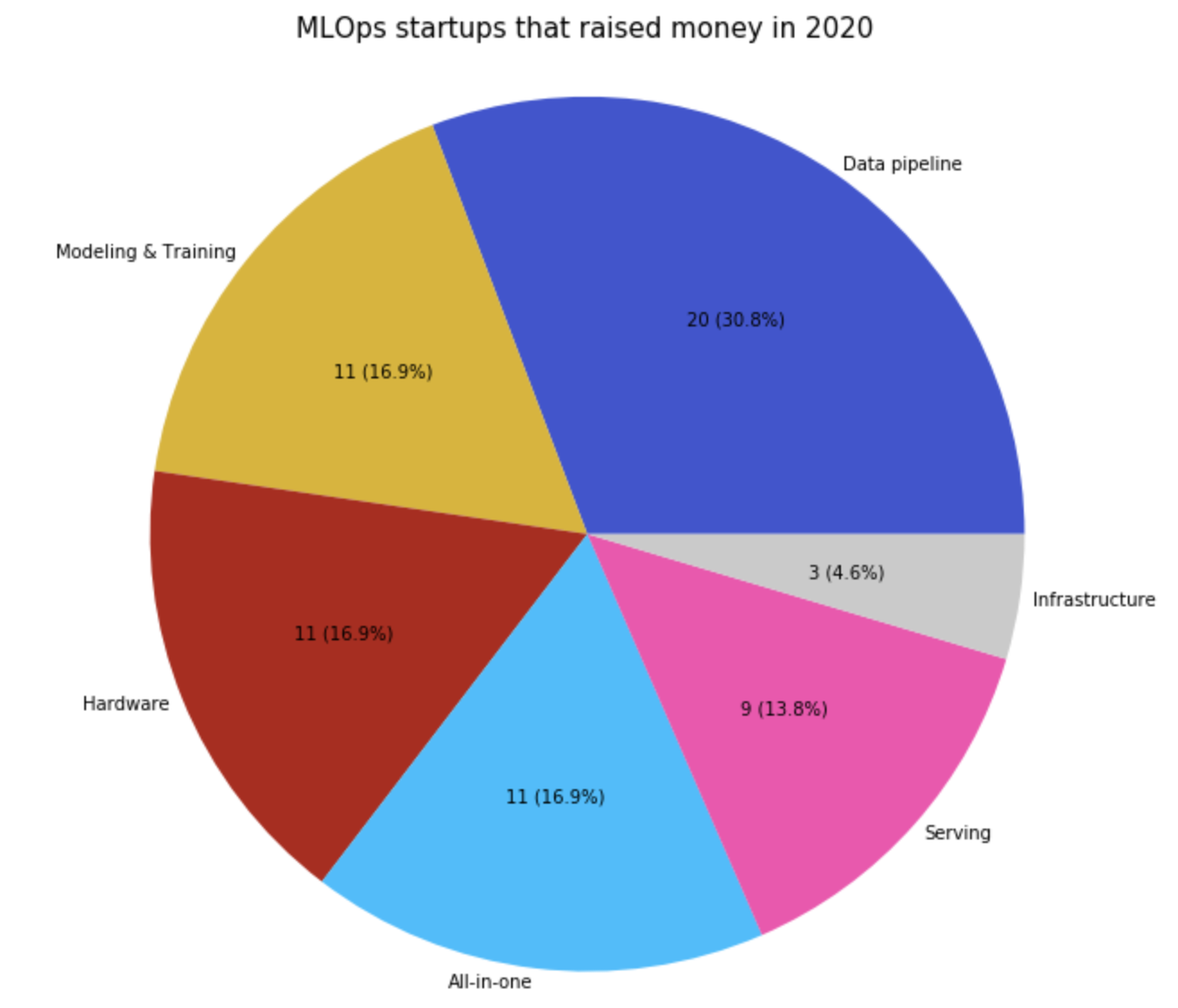

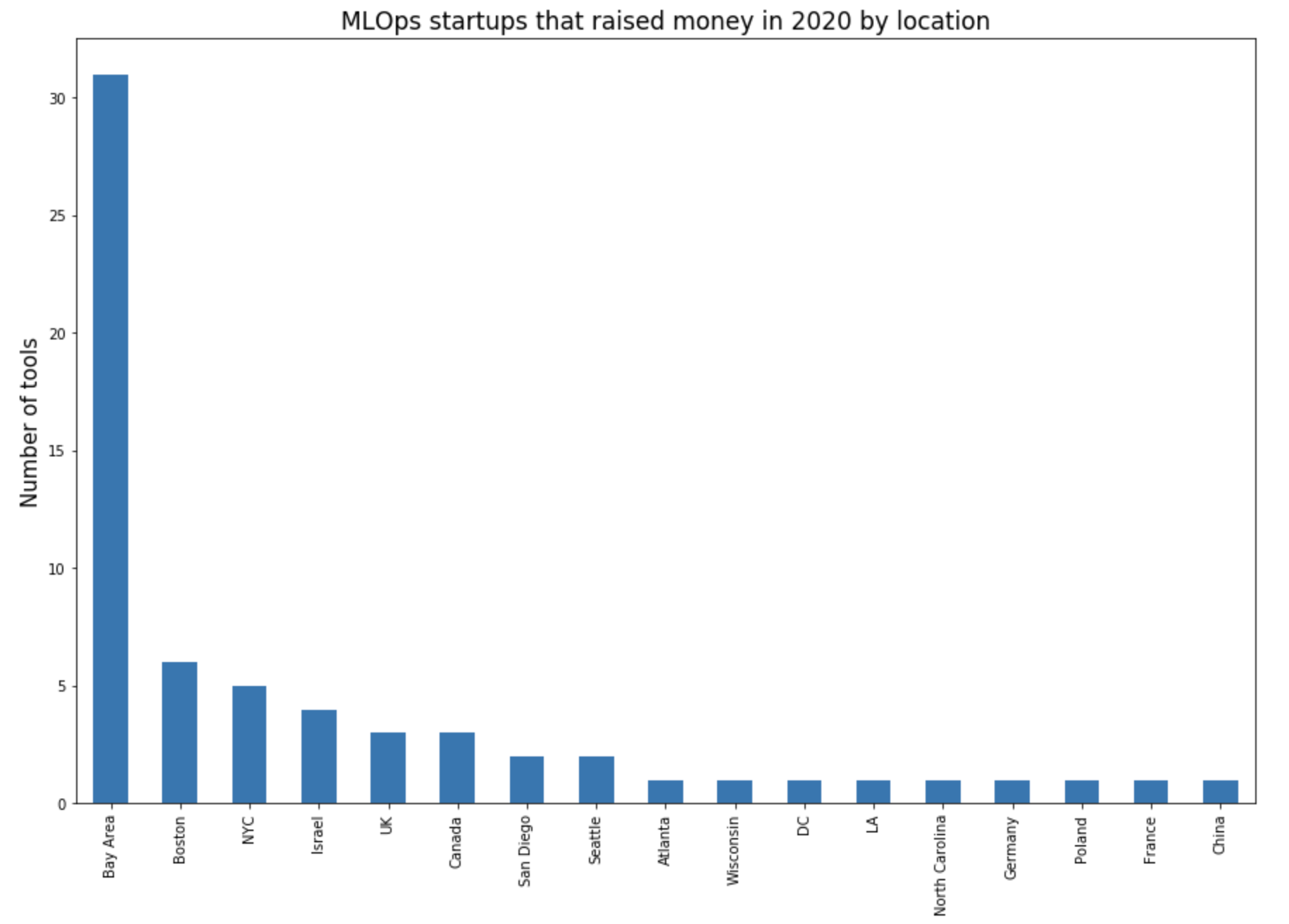

MLOps Tooling Landscape v2 (+84 new tools)

As a summary:

The article gives an overview of the MLOps tooling landscape. A few takeaways:

- There’s an increasing focus on deployment

- San Francisco is still the epicenter of machine learning, but not the only hub

- MLOps infrastructures in the US and China are diverging

- More interest from academia in machine learning in production, for example the paper Challenges in Deploying Machine Learning: a Survey of Case Studies

This avocado armchair could be the future of AI

As a summary:

What can we do with GPT-3?

Here are two answers:

- DALL·E: creating images from text

- CLIP: connecting text and images

Machine learning is going real-time

I’m not sure that machine learning as a whole is moving towards real-time. Or at least, I don’t think it’s imminent. From my point of view, it depends heavily on the context you work in.

For me, real-time inference is becoming more and more important, but I’m not sure the same is true for training.

I found this article interesting, and it is worth a read if you have to deal with real-time machine learning (real-time inference or training).

Thank you for reading. Feel free to contact me on Twitter if you want to discuss machine learning in real life.